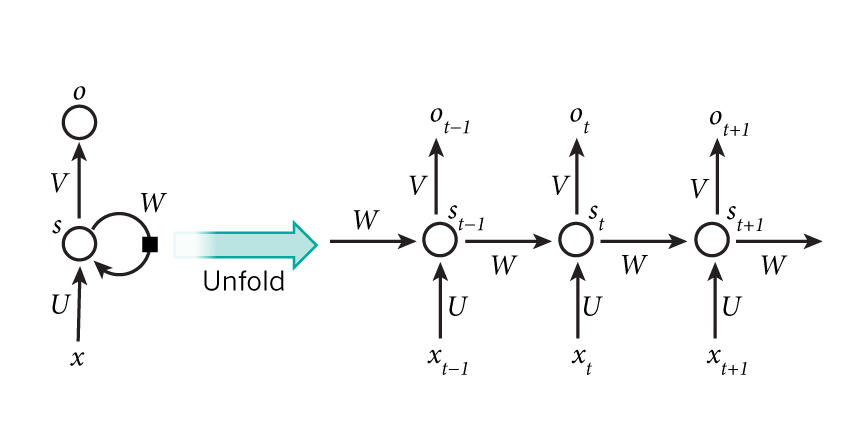

Picture: How we learn words.

Interpretation: Powerful tool

1. Disassemble a word stream from medium A: TV.

2. Disassemble a word stream from medium B: Twitter.

3. Correlate the two streams, and use the result to

   3.1. read the current discussion;

   3.2. write the future discussion.
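The three steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: "disassembling" is taken to mean tokenizing a stream into word counts, "correlating" is approximated with cosine similarity, and "reading the current discussion" means listing the most frequent terms both streams share. The sample sentences, the stopword list, and the function names (`disassemble`, `correlate`, `current_discussion`) are hypothetical. Step 3.2 is not modeled.

```python
import re
from collections import Counter
from math import sqrt

# Tiny illustrative stopword list (an assumption, not a standard list).
STOPWORDS = {"the", "and", "on", "is", "about", "a", "of"}

def disassemble(stream: str) -> Counter:
    """Steps 1 and 2: split a text stream into lowercase word tokens and count them."""
    return Counter(re.findall(r"[a-z']+", stream.lower()))

def correlate(a: Counter, b: Counter) -> float:
    """Step 3: cosine similarity of two word-count vectors (0 = no overlap, 1 = same mix)."""
    dot = sum(a[w] * b[w] for w in set(a) & set(b))
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def current_discussion(a: Counter, b: Counter, n: int = 3) -> list[str]:
    """Step 3.1: shared non-stopword terms, most frequent across both streams first."""
    shared = (set(a) & set(b)) - STOPWORDS
    return sorted(shared, key=lambda w: (-(a[w] + b[w]), w))[:n]

# Hypothetical sample text standing in for medium A (TV) and medium B (Twitter).
tv = "Tonight the election debate focused on taxes and the economy."
twitter = "Everyone is talking about the debate: taxes, taxes, economy!"

a, b = disassemble(tv), disassemble(twitter)
print(current_discussion(a, b))   # ['taxes', 'debate', 'economy']
print(round(correlate(a, b), 2))  # 0.52
```

Cosine similarity is used here only because it ignores stream length; any other correlation measure over word counts would fit the same outline.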

Conclusion: media word streams are factors that influence thought and discussion.

Awareness is advised, since this tool will be used for both good and evil purposes.

---

In computer science and information science, an ontology is a formal naming and definition of the types, properties, and interrelationships of the entities in a particular domain. An ontology compartmentalizes the variables needed for some set of computations and establishes the relationships between them. The word element onto- comes from the Greek ὤν, ὄντος ("being", "that which is"). There is also generally an expectation that the features of the model in an ontology should closely resemble the real world (that is, the object being modeled).[3]
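To make "naming, definition, and relationships" concrete, here is a minimal sketch of an ontology stored as subject-relation-object triples. The class name, the relation names `is_a` and `produces`, and the example concepts are hypothetical illustrations borrowed from the TV/Twitter example earlier; real ontology languages (for example OWL) are far richer.

```python
from dataclasses import dataclass, field

@dataclass
class Ontology:
    """A toy ontology: a set of named concepts plus (subject, relation, object) triples."""
    concepts: set[str] = field(default_factory=set)
    relations: set[tuple[str, str, str]] = field(default_factory=set)

    def relate(self, subject: str, relation: str, obj: str) -> None:
        # Using a concept in a relation also names (defines) it in the ontology.
        self.concepts.update({subject, obj})
        self.relations.add((subject, relation, obj))

    def query(self, subject: str, relation: str) -> set[str]:
        """Return every object linked to `subject` by `relation`."""
        return {o for s, r, o in self.relations if s == subject and r == relation}

onto = Ontology()
onto.relate("TV", "is_a", "Medium")
onto.relate("Twitter", "is_a", "Medium")
onto.relate("Medium", "produces", "WordStream")

print(onto.query("TV", "is_a"))  # {'Medium'}
print(sorted(onto.concepts))     # ['Medium', 'TV', 'Twitter', 'WordStream']
```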

The Zachman Framework is an enterprise ontology and a fundamental structure for Enterprise Architecture. It provides a formal and structured way (a methodology to discern) of viewing and defining an enterprise.

Picture: 1. Contextual model, 2. Conceptual model, 3. Information model, 4. Data model
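The framework is usually drawn as a grid: model layers as rows, and the six interrogatives What, How, Where, Who, When, and Why as columns. The sketch below builds such a grid using only the four layers named in the picture caption above; treating those four as rows, and the sample cell texts, are illustrative assumptions rather than the framework's official contents.

```python
from itertools import product

# Rows: the four model layers named in the picture (assumed subset of the framework's rows).
LAYERS = ["Contextual", "Conceptual", "Information", "Data"]
# Columns: the six interrogatives the Zachman Framework is organized around.
QUESTIONS = ["What", "How", "Where", "Who", "When", "Why"]

def empty_grid() -> dict[tuple[str, str], str]:
    """Create one cell per (layer, question) pair; each cell will hold a model description."""
    return {cell: "" for cell in product(LAYERS, QUESTIONS)}

grid = empty_grid()
# Hypothetical cell contents, for illustration only.
grid[("Contextual", "What")] = "Things important to the business"
grid[("Data", "What")] = "Table definitions for customer records"

# Reading one column top-down answers the same question at increasing levels of detail.
for layer in LAYERS:
    print(f"{layer:<12} What -> {grid[(layer, 'What')] or '(not yet described)'}")
```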

Contextual means depending on the context: the surrounding words, phrases, and paragraphs of the writing. For example, the word "read" can have two different meanings (present tense or past tense) depending on the words around it.
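The "read" example can be turned into a toy context rule: look at the words near the target word and let them decide its sense. This is a deliberately naive sketch, assuming small hand-picked cue lists (`PRESENT_CUES`, `PAST_CUES`) and a window of two words on each side; all names are hypothetical. The last call shows a sentence where the context genuinely does not settle the meaning.

```python
import re

# Hypothetical cue words; a real system would learn such cues from annotated text.
PRESENT_CUES = {"will", "to", "can", "must", "should"}
PAST_CUES = {"have", "has", "had", "was", "were", "yesterday"}

def tense_of_read(sentence: str, window: int = 2) -> str:
    """Guess the tense of 'read' from the words within `window` positions of it."""
    words = re.findall(r"[a-z]+", sentence.lower())
    if "read" not in words:
        raise ValueError("sentence does not contain 'read'")
    i = words.index("read")
    context = set(words[max(0, i - window):i] + words[i + 1:i + 1 + window])
    if context & PAST_CUES:
        return "past"
    if context & PRESENT_CUES:
        return "present"
    return "ambiguous"  # the surrounding words do not settle the meaning

print(tense_of_read("I will read the report."))  # present
print(tense_of_read("I have read the report."))  # past
print(tense_of_read("I read it yesterday."))     # past
print(tense_of_read("I read the report."))       # ambiguous
```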

A pattern is a discernible regularity in the world: it follows certain rules and can be observed by analysis.

Any of our senses may directly observe patterns, which express the governing rules.
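One way to make "a regularity that follows rules, observable by analysis" concrete is to search a sequence of observations for a repeating period, then use the discovered rule to predict what comes next. This is a minimal sketch assuming the only kind of rule sought is exact periodic repetition; the function names and sample data are hypothetical.

```python
from typing import Optional, Sequence

def find_period(seq: Sequence) -> Optional[int]:
    """Return the smallest p such that seq[i] == seq[i + p] for every valid i.

    Only periods that repeat at least twice (p <= len(seq) // 2) count as a pattern.
    """
    n = len(seq)
    for p in range(1, n // 2 + 1):
        if all(seq[i] == seq[i + p] for i in range(n - p)):
            return p
    return None

def predict_next(seq: Sequence):
    """Use the discovered rule to predict the next element, or None if no pattern was found."""
    p = find_period(seq)
    return None if p is None else seq[len(seq) - p]

observations = ["day", "night", "day", "night", "day"]
print(find_period(observations))     # 2
print(predict_next(observations))    # night
print(find_period([3, 1, 4, 1, 5]))  # None
```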

Systems theory is the interdisciplinary study of systems in general, with the goal of discovering patterns and elucidating principles. A central topic of systems theory is self-regulating (governing) systems, i.e., systems that self-correct through feedback.
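A self-regulating system can be illustrated with the simplest feedback loop, a proportional controller, such as a thermostat nudging a temperature toward its target. This is a sketch assuming a single numeric state and a fixed gain; the names `regulate`, `setpoint`, and `gain` are illustrative, not taken from any particular systems-theory text.

```python
def regulate(value: float, setpoint: float, gain: float = 0.5, steps: int = 20) -> list[float]:
    """Simulate a self-correcting system using proportional negative feedback.

    Each step measures the error (distance from the setpoint) and feeds a fraction
    `gain` of it back as a correction. With 0 < gain < 2 the error's magnitude
    shrinks every step.
    """
    history = [value]
    for _ in range(steps):
        error = setpoint - value  # feedback: observe the deviation from the goal
        value += gain * error     # correct part of the deviation
        history.append(value)
    return history

trace = regulate(value=10.0, setpoint=20.0)
print(trace[:5])                     # [10.0, 15.0, 17.5, 18.75, 19.375]
print(abs(trace[-1] - 20.0) < 1e-4)  # True
```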

Taxonomy is the practice and science of classification.
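A taxonomy can be represented as a tree in which each category points to its broader parent, so classification becomes a walk up the IS-A links. The sketch below uses a hypothetical taxonomy of media and assumes the tree contains no cycles.

```python
# Hypothetical taxonomy of media: each category maps to its broader parent (IS-A).
PARENT = {
    "Twitter": "SocialMedia",
    "SocialMedia": "Medium",
    "TV": "BroadcastMedia",
    "BroadcastMedia": "Medium",
}

def lineage(category: str) -> list[str]:
    """Follow IS-A links upward to the root. Assumes the taxonomy has no cycles."""
    path = [category]
    while path[-1] in PARENT:
        path.append(PARENT[path[-1]])
    return path

def is_a(category: str, ancestor: str) -> bool:
    """True if `category` falls under `ancestor` (every category is under itself)."""
    return ancestor in lineage(category)

print(lineage("Twitter"))         # ['Twitter', 'SocialMedia', 'Medium']
print(is_a("TV", "Medium"))       # True
print(is_a("TV", "SocialMedia"))  # False
```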

A meronomy or partonomy is a type of hierarchy that deals with part–whole relationships, in contrast to a taxonomy whose categorisation is based on discrete sets. The part–whole relationship is sometimes referred to as HAS-A, and corresponds to object composition in object-oriented programming.[1]
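The IS-A / HAS-A contrast maps directly onto two object-oriented techniques: inheritance for taxonomy and composition for meronomy. In this sketch the classes `Message`, `Tweet`, and `Thread` are hypothetical, chosen to echo the Twitter example at the top.

```python
from dataclasses import dataclass

# Taxonomy (IS-A): a Tweet is a kind of Message, expressed with inheritance.
@dataclass
class Message:
    text: str

@dataclass
class Tweet(Message):
    hashtags: list[str]

# Meronomy (HAS-A): a Thread is made of Tweets, expressed with composition.
@dataclass
class Thread:
    parts: list[Tweet]

    def word_count(self) -> int:
        # A property of the whole, derived from its parts.
        return sum(len(t.text.split()) for t in self.parts)

t1 = Tweet("Debate tonight", ["#debate"])
t2 = Tweet("Taxes were the main topic", ["#taxes"])
thread = Thread([t1, t2])

print(isinstance(t1, Message))  # True  (IS-A)
print(t1 in thread.parts)       # True  (HAS-A)
print(thread.word_count())      # 7
```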

The study of meronomy is known as mereology, and in linguistics a meronym is the name given to a constituent part of, the substance of, or a member of something.
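The three kinds of meronym named above (part, substance, member) can be kept apart in a small lexicon. Lexical databases such as WordNet draw the same three-way distinction; the dictionary and function names below are hypothetical stand-ins, not WordNet's API.

```python
from typing import Optional

# Hypothetical mini-lexicon: whole -> {kind of meronymy -> meronyms}.
MERONYMS = {
    "car": {"part": ["wheel", "engine"]},
    "table": {"part": ["leg", "top"], "substance": ["wood"]},
    "flock": {"member": ["sheep"]},
}

def meronyms_of(whole: str, kind: Optional[str] = None) -> list[str]:
    """Return the meronyms of a word, optionally only one kind (part/substance/member)."""
    kinds = MERONYMS.get(whole, {})
    if kind is not None:
        return list(kinds.get(kind, []))
    return [m for names in kinds.values() for m in names]

def holonyms_of(part: str) -> list[str]:
    """Inverse lookup: which wholes list this word as a meronym."""
    return [w for w, kinds in MERONYMS.items() if any(part in names for names in kinds.values())]

print(meronyms_of("table"))               # ['leg', 'top', 'wood']
print(meronyms_of("table", "substance"))  # ['wood']
print(holonyms_of("wheel"))               # ['car']
```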

In philosophy and mathematical logic, mereology (from the Greek μέρος, root: μερε(σ)-, "part" and the suffix -logy "study, discussion, science") is the study of parts and the wholes they form. Whereas set theory is founded on the membership relation between a set and its elements, mereology emphasizes the meronomic relation between entities, which, from a set-theoretic perspective, is closer to the concept of inclusion between sets.
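The difference between membership and inclusion, and why parthood sits closer to inclusion, can be shown with Python's built-in sets. This sketch assumes a simple modeling choice, representing each object by the set of its atomic parts so that parthood becomes the subset test; the names `part_of`, `tire`, `wheel`, and `car` are illustrative only, and the code shows the analogy rather than a full mereological theory.

```python
def part_of(x: frozenset, y: frozenset) -> bool:
    """Parthood modeled as inclusion: every atom of x is also an atom of y."""
    return x <= y

tire = frozenset({"tire"})
wheel = frozenset({"rim", "tire"})
car = frozenset({"rim", "tire", "engine", "seat"})

# Like inclusion, parthood is transitive and reflexive (each object is an improper part of itself).
print(part_of(tire, wheel), part_of(wheel, car), part_of(tire, car))  # True True True
print(part_of(car, car))                                              # True

# Membership is a different relation and is not transitive.
A = frozenset({1})
B = frozenset({A})
print(1 in A, A in B, 1 in B)  # True True False
```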